UCLA institute to help biologists, doctors mine ‘big data’

Millions upon millions of medical records and test results. Countless DNA sequences. Hard drives stuffed with images of all kinds — pictures of cells, scans of body parts. It’s all part of the deluge of information often known as “big data,” an ever-growing stockpile of digital material that scientists hope will reveal insights about biology and lead to improvements in medical care.

UCLA intends to position itself at the center of the effort.

The school’s new Institute for Quantitative and Computational Biosciences, which administrators are expected to announce Tuesday, will bring together researchers and computer experts from the medical school and other departments throughout the UCLA campus to help make sense of the data, university leaders said.

Analyzing big data might help scientists understand how genes interact with the environment to promote good health or cause disease, and provide a clearer understanding of which medical treatments work best for particular populations, or in particular circumstances.

“It’s figuring out the signals from the noise,” said UCLA Division of Life Sciences Dean Victoria Sork. She added that the university has invested a total of $50 million in computational biosciences so far, hiring new faculty and improving facilities.

“UCLA has all these experts, but we were lacking the people and thinkers to say, how do we develop the tools to make the discoveries?” she said.

Sork said that administrators started working about five years ago on the idea for the institute, which will link big-data efforts in the life sciences across campus.

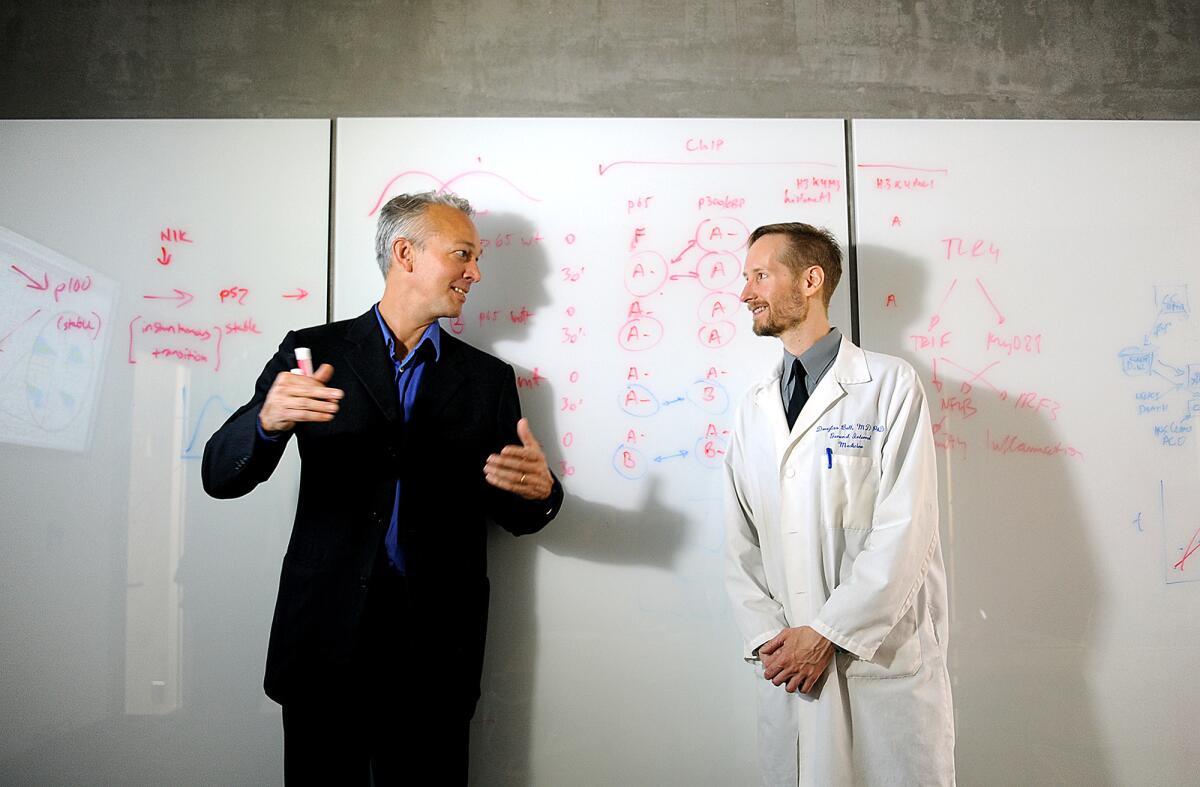

The research center will provide training for students and faculty members on how to work with large data sets, said Alexander Hoffmann, director of the institute and a professor of microbiology, immunology and molecular genetics at UCLA. It should also help scientists learn about potentially useful sources of data such as the University of California Research eXchange, which maintains a searchable database of 12 million electronic health records compiled from patients at the five UC medical centers (the patients’ identities are not revealed).

The trouble with big data, Hoffmann said, isn’t volume. It’s complexity.

“I may have genetic data, or imaging data. I may have sleep-pattern data, or exercise data. I may have data on what people eat,” he said. “Figuring out how the pieces go together takes computer science.”

Many universities want to develop big-data expertise these days, said Philip E. Bourne, associate director for data science at the National Institutes of Health.

Bourne, a former professor at UC San Diego who has worked with Hoffmann in the past, said UCLA’s investment is significant and a regional first in integrating big data to solve biomedical problems.

In the Los Angeles area, both USC and UCLA recently received NIH grants to fund biomedical research using big data. USC has said it will spend $1 billion on big data, including many projects beyond biomedical research.

For more on health, follow me on Twitter: @LATerynbrown
