Regulate social media? California still has a plan for that

- Share via

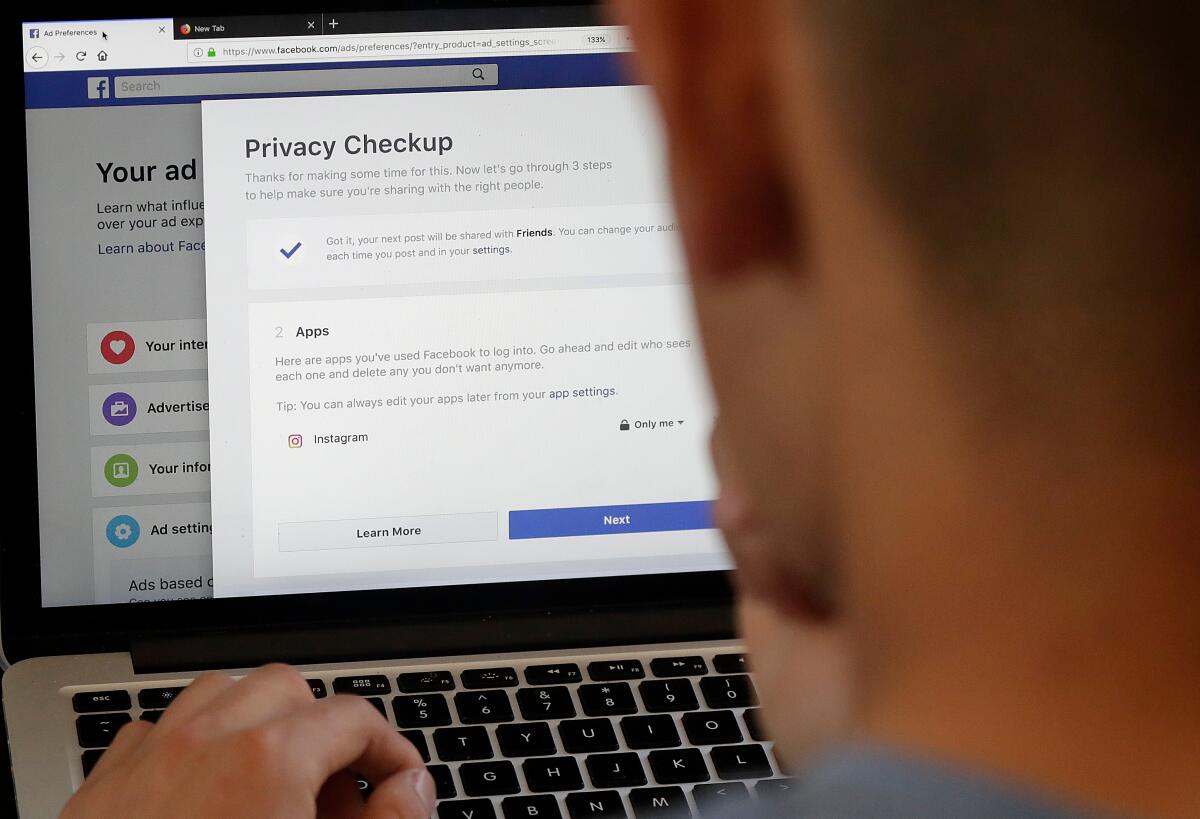

This month, a bill to regulate social media services for children was rejected by California’s Senate Appropriations Committee without explanation. The proposed legislation, sponsored by Assemblymembers Jordan Cunningham (R-Paso Robles) and Buffy Wicks (D-Oakland) and called the Social Media Platform Duty to Children Act, would have allowed the state attorney general and local prosecutors to sue social media companies for knowingly building features into their products that addict children. The powerful tech industry lobbied for months to defeat the bill.

But a companion bill spearheaded by the same lawmakers — the Age-Appropriate Design Code Act — survived the appropriations committee and may still become law.

Without the strong enforcement provisions in the Social Media Platform Duty to Children Act, some despair that the legislative effort was a waste of time. Progress, however, is made in increments. These legislative efforts have already achieved several gains.

First, they named the problem as one of corporate responsibility and reframed the public discussion around social media regulation.

Both bills identified social media addiction as a legitimate problem, and one caused by corporations. Social media companies and their defenders have repeatedly rejected responsibility for the addictive nature of their offerings. Facebook, for example, has denied reports that Instagram use harmed teenage girls and pushed back forcefully against claims that its platform harms users.

This corporate gaslighting effectively blames children for being addicted to social media and conveniently ignores how companies have intentionally designed their products to have addictive features, suggesting: My product isn’t the problem; it’s your kid. The bipartisan legislative effort rejected this view, legitimized parental concerns about social media addiction and reframed the issue as one of corporate responsibility and product liability instead of blaming the victim.

Second, these bills recognized that internet use implicates competing values and priorities and chipped away at Big Tech exceptionalism.

When faced with the prospect of any kind of online regulation, social media companies, old-school internet idealists and free-market zealots ring the same two alarm bells: Regulation will stifle free speech and impede tech innovation. For the past couple of decades or so, these twin bugbears have scared away legislators from imposing regulation with real teeth. But these arguments have multiple flaws.

Social media companies are not absolute protectors of free speech and already impose limits on the speech they distribute. Nor are they the only innovative businesses subject to regulation. The biotech industry, for example, must comply with regulations that promote safety and efficacy.

A focus only on so-called free speech and innovation further ignores that other important values can be threatened by an unregulated internet, such as privacy, autonomy and safety.

Until this month, it looked as though California might finally pass a bill that would hold the tech industry accountable for the harm caused by its products. But even though that enforcement legislation creating a new path for lawsuits has been killed, the fight isn’t over.

The design legislation that’s still alive in the state Senate would require companies to implement common-sense privacy requirements such as stringent default settings and clear, concise terms of service. It would also require companies to assess the impact that their products have on children and prohibit features such as “dark patterns,” pop-ups and other elements of the product interface that encourage children to provide personal information.

These provisions are not just good for children — they’re good for all users. Critics who claim that the legislation would require age verification on all websites are missing the point. Companies should comply with these provisions regardless of the age of their users; age verification would be necessary only if they wish to engage in deceptive and harmful online practices with adult users.

The legislation would impose civil penalties of up to $2,500 per affected child for negligent violations and up to $7,500 per affected child for intentional ones. Although these penalties may be too small to financially damage companies such as Facebook (or technically, Meta), the bill stipulates that they will be used to offset the costs of regulation. Civil penalties also tend to have a public shaming effect, which may further erode the Teflon coating that has long protected the tech industry from regulation.

Moreover, the death of the companion bill empowering state lawsuits doesn’t preclude all civil lawsuits against social media companies. Although parents would not be able to sue under the design bill itself, the standards that develop in response to it may help parents in lawsuits brought against companies on the basis of product liability, tort or contract liability. Tech may face pressure from consumers too: Companies that fail to conform to prevailing business norms may be seen as negligent or reckless.

It’s now up to the state Senate to approve the common-sense design act that will help protect California’s children from addictive and toxic online environments.

Nancy Kim is a law professor at Chicago-Kent College of Law, Illinois Institute of Technology.
