Company that sent AI calls mimicking Biden to New Hampshire voters agrees to $1-million fine

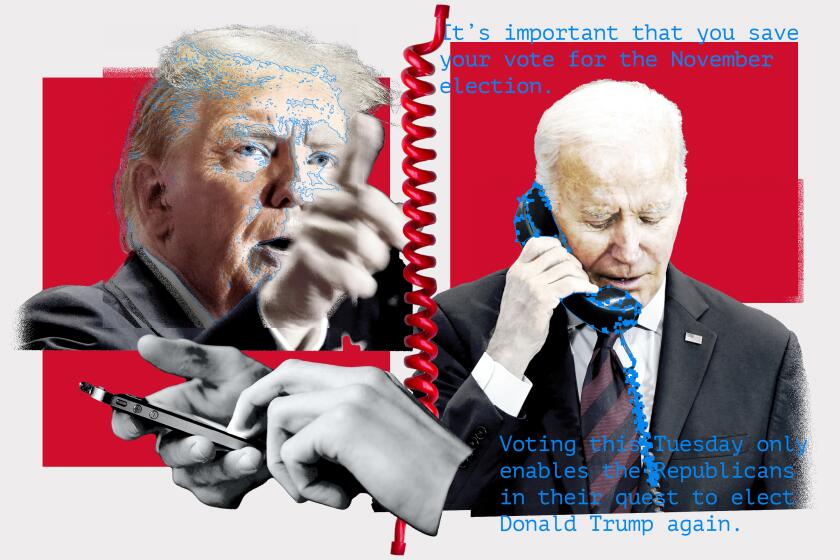

MEREDITH, N.H. — A company that sent deceptive phone messages to New Hampshire voters using artificial intelligence to mimic President Biden’s voice agreed Wednesday to pay a $1-million fine, federal regulators said.

Lingo Telecom, the voice service provider that transmitted the robocalls, agreed to the settlement to resolve enforcement action taken by the Federal Communications Commission, which had initially sought a $2-million fine.

The case is seen by many as an unsettling early example of how AI might be used to influence groups of voters and democracy as a whole.

Meanwhile, Steve Kramer, a political consultant who orchestrated the calls, still faces a proposed $6-million FCC fine as well as state criminal charges.

The phone messages were sent to thousands of New Hampshire voters on Jan. 21. They featured a voice similar to Biden’s falsely suggesting that voting in the state’s presidential primary would preclude recipients from casting ballots in the November general election.

Kramer, who paid a magician and self-described “digital nomad” to create the recording, told the Associated Press earlier this year that he wasn’t trying to influence the outcome of the primary, but rather, he wanted to highlight the potential dangers of AI and spur lawmakers into action.

If found guilty, Kramer could face a prison sentence of up to seven years on a charge of voter suppression and a sentence of up to one year on a charge of impersonating a candidate.

The FCC said that, in addition to agreeing to the civil fine, Lingo Telecom had agreed to comply with strict caller ID authentication rules and requirements and to verify more thoroughly the accuracy of the information provided by its customers and upstream providers.

“Every one of us deserves to know that the voice on the line is exactly who they claim to be,” FCC Chair Jessica Rosenworcel said in a statement. “If AI is being used, that should be made clear to any consumer, citizen, and voter who encounters it. The FCC will act when trust in our communications networks is on the line.”

Lingo Telecom did not immediately respond to a request for comment. The company had earlier said it strongly disagreed with the FCC’s action, calling it an attempt to impose new rules retroactively.

Nonprofit consumer advocacy group Public Citizen commended the FCC on its action. Co-President Robert Weissman said Rosenworcel got it “exactly right” by saying consumers have a right to know when they are receiving authentic content and when they are receiving AI-generated deepfakes. Weissman said the case illustrates how such deepfakes pose “an existential threat to our democracy.”

FCC Enforcement Bureau Chief Loyaan Egal said the combination of caller ID spoofing and generative AI voice-cloning technology posed a significant threat “whether at the hands of domestic operatives seeking political advantage or sophisticated foreign adversaries conducting malign influence or election interference activities.”

Perry writes for the Associated Press.
